Nested Learning in Practice: Geometry of the Deep Optimizer, Multi‑Clock Transformers, and Reference Code

TL;DR Nested Learning (NL) aims to achieve plasticity without amnesia (crucial for continual learning) by orchestrating different update frequencies for different components, a concept called a Multi-Clock System. It also proposes a deep optimizer based on an inner regression objective, which geometrically acts as a right-side projection in input space that selectively reduces the weight matrix's sensitivity along the current input direction. The post includes practical recipes and reference code for applying NL to deep linear layers, convolutional networks, and Multi-Clock Transformers.

1. Why Nested Learning?

Standard deep learning draws a hard line between architecture (layers) and optimization (the training rule). Nested Learning (NL) observes that both are stateful learners with update rules; the only principled difference is how often each state is updated, i.e., its update frequency. Orchestrating different frequencies is how NL aims to provide plasticity without amnesia, the core tension in continual learning.

2. Formal setup

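We use standard notation. Inputs are \(x_t \in \mathbb{R}^{d_{\text{in}}}\), indexed by time step \(t\). Let a module (a layer, an optimizer’s momentum, or an attention cache) be an associative memory that learns a mapping \(\mathcal{M}: \mathcal{K} \to \mathcal{V}\) from keys to values.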

2.1 Associative memory

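Definition 1 (Associative Memory) Given keys \(\mathcal{K} \subseteq \mathbb{R}^{d_k}\) and values \(\mathcal{V} \subseteq \mathbb{R}^{d_v}\), an associative memory is an operator \(\mathcal{M}: \mathcal{K} \to \mathcal{V}\) learned by minimizing an internal objective \(\tilde{\mathcal{L}}\):

\[\mathcal{M}^{*} \;=\; \arg\min_{\mathcal{M}}\; \tilde{\mathcal{L}}\big(\mathcal{M}(\mathcal{K});\, \mathcal{V}\big).\]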

This perspective applies uniformly to optimizers (they compress gradient streams), attention (key–value stores), and linear layers.
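To make Definition 1 concrete, here is a minimal sketch of the simplest case, assuming a linear memory \(\mathcal{M}(k) = Wk\) and the L2 objective \(\tilde{\mathcal{L}} = \tfrac12\|W K^\top - V^\top\|_F^2\), fitted by plain gradient descent. The function name `learn_linear_memory`, the step size, and the toy keys/values are hypothetical choices for illustration.

```python
import numpy as np

def learn_linear_memory(keys, values, lr=0.1, steps=500):
    """Hypothetical helper: fit M(k) = W k by gradient descent on the internal
    objective 0.5 * ||W K^T - V^T||_F^2 (an L2 instance of Definition 1)."""
    d_k, d_v = keys.shape[1], values.shape[1]
    W = np.zeros((d_v, d_k))
    for _ in range(steps):
        residual = W @ keys.T - values.T      # (d_v, n) recall error for every key
        W -= lr * residual @ keys             # gradient of the L2 objective w.r.t. W
    return W

rng = np.random.default_rng(0)
K = np.eye(4)[:3]                             # three orthonormal keys in R^4 (one per row)
V = rng.normal(size=(3, 2))                   # arbitrary values in R^2
W = learn_linear_memory(K, V)
print(np.round(W @ K[1], 3), np.round(V[1], 3))   # recalling key 1 returns value 1
```

An optimizer's momentum or an attention cache fits the same template; only the keys, values, and objective change.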

2.2 Update frequency and levels

Let one data‑point update be one unit of time. For any component \(A\), define its **update frequency**

\[f_A \;=\; \text{\#updates of }A \text{ per unit time}.\]

We write \(A \succ B\) (“A is faster”) if (i) \(f_A > f_B\), or (ii) \(f_A = f_B\) but the state of \(B\) at time \(t\) depends on the state of \(A\) at time \(t\). Sorting components by \(\succ\) yields *levels*; higher level ⇒ lower frequency.
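A small sketch of turning update periods into levels, assuming each component updates once every `period` data points (so \(f_A = 1/\text{period}\)). Only rule (i) is modelled here; the dependency tie-break of rule (ii) is omitted, so equal-frequency components share a level. The component names are hypothetical.

```python
from itertools import groupby

def assign_levels(periods):
    """periods: {component name: updates once every `period` data points}.
    Returns {name: level}, level 0 = fastest. Since f = 1 / period, sorting by
    ascending period is sorting by descending frequency. Rule (ii) is not
    modelled: components with equal frequency share a level."""
    ordered = sorted(periods, key=lambda name: periods[name])
    levels = {}
    for level, (_, group) in enumerate(groupby(ordered, key=lambda name: periods[name])):
        for name in group:
            levels[name] = level
    return levels

print(assign_levels({"attention_cache": 1, "momentum": 1, "ffn": 64, "out_proj": 8}))
```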

2.3 Deep optimizer (L2 regression view)

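For a linear map \(y_t = W x_t\), the local “surprise” is \(u_t = \nabla_{y_t}\mathcal{L}\). The usual outer gradient is \(\nabla_W \mathcal{L} = u_t\, x_t^\top\). NL proposes an inner L2 objective that regresses \(W x_t\) to \(u_t\):

\[\tilde{\mathcal{L}}(W) \;=\; \tfrac{1}{2}\,\big\|W x_t - u_t\big\|_2^2.\]

One gradient step of size \(\alpha\) gives

\[W \;\leftarrow\; W - \alpha\,(W x_t - u_t)\,x_t^\top \;=\; W\,(I - \alpha\, x_t x_t^\top) + \alpha\, u_t x_t^\top.\]

Combining this with an outer step of size \(\eta'\), and absorbing the \(\alpha\, u_t x_t^\top\) term into the effective step size \(\eta = \eta' - \alpha\), yields

\[W_{t+1} \;=\; W_t\,(I - \alpha\, x_t x_t^\top) \;-\; \eta\, u_t x_t^\top.\]

(For mini‑batches, replace \(x_t x_t^\top\) with the batch Gram matrix \(G = \tfrac{1}{B} X^\top X\), where the \(B\) rows of \(X\) are the batch inputs.)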
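A quick numerical check of the single-sample algebra, assuming a linear layer \(y = Wx\), a random stand-in for the surprise \(u = \nabla_y \mathcal{L}\), an inner step \(\alpha\) on \(\tfrac12\|Wx - u\|^2\), and an outer step \(\eta'\). The step sizes and shapes are hypothetical; the point is that the two steps collapse to \(W(I - \alpha x x^\top) - (\eta' - \alpha)\, u x^\top\).

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out = 5, 3
W = rng.normal(size=(d_out, d_in))
x = rng.normal(size=d_in)              # current input x_t
u = rng.normal(size=d_out)             # stand-in for the local surprise u_t = dL/dy_t
alpha, eta_outer = 0.1, 0.5            # hypothetical inner and outer step sizes

# Inner gradient step on 0.5 * ||W x - u||^2, then the outer step -eta' * u x^T.
W_inner = W - alpha * np.outer(W @ x - u, x)
W_two_step = W_inner - eta_outer * np.outer(u, x)

# Closed form with the alpha * u x^T term absorbed: eta = eta' - alpha.
eta = eta_outer - alpha
W_closed = W @ (np.eye(d_in) - alpha * np.outer(x, x)) - eta * np.outer(u, x)

print(np.allclose(W_two_step, W_closed))
```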

2.4 Continuum Memory System (CMS)

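CMS composes memories with distinct clocks, chaining \(k\) blocks where block \(\ell\) is updated at frequency \(f_\ell\):

\[y_t \;=\; \mathrm{MLP}^{(f_k)}\Big(\mathrm{MLP}^{(f_{k-1})}\big(\cdots \mathrm{MLP}^{(f_1)}(x_t)\big)\Big).\]

With per‑level accumulation, the parameters \(\theta^{(\ell)}\) of level \(\ell\), with period \(C^{(\ell)}\), update only when the period divides the global step \(t\):

\[\theta^{(\ell)}_{t+1} \;=\; \begin{cases} \theta^{(\ell)}_{t} - \eta^{(\ell)} \displaystyle\sum_{s=t-C^{(\ell)}+1}^{t} g^{(\ell)}_{s} & \text{if } t \equiv 0 \pmod{C^{(\ell)}},\\[4pt] \theta^{(\ell)}_{t} & \text{otherwise,} \end{cases}\]

where \(g^{(\ell)}_s\) is the gradient of level \(\ell\) computed at step \(s\).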
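A minimal sketch of the accumulate-then-apply rule, assuming plain SGD within each level, NumPy arrays as parameters, and a 1-based global step counter. `ClockedLevel` is a hypothetical helper, not a class from the accompanying files.

```python
import numpy as np

class ClockedLevel:
    """One CMS level (hypothetical helper): accumulate a gradient every step,
    apply the window's summed gradient only when the level is due."""

    def __init__(self, params, period, lr):
        self.params = params
        self.period = period
        self.lr = lr
        self.acc = np.zeros_like(params)
        self.n_updates = 0

    def step(self, t, grad):
        self.acc += grad                          # accumulate g_s over the current window
        if t % self.period == 0:                  # period divides the global step: level is due
            self.params -= self.lr * self.acc     # theta <- theta - lr * (sum of window gradients)
            self.acc[:] = 0.0                     # start a new window
            self.n_updates += 1

levels = [ClockedLevel(np.zeros(2), period=C, lr=0.1) for C in (1, 4, 16)]
for t in range(1, 17):                            # 1-based global step counter
    for level in levels:
        level.step(t, grad=np.ones(2))            # constant gradient, for illustration only

for level in levels:
    print(level.period, level.n_updates, level.params)
```

Because gradients are accumulated rather than discarded, all three levels end at the same parameters here; the slower levels simply apply the total in fewer, larger steps.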

Figure 1. Multi‑time‑scale update schedule for three levels. Only due levels step; others accumulate gradients.

3. Geometry of the deep‑optimizer projection

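The transformation \(W \mapsto W\,(I - \alpha\, x x^\top)\) is a right‑side projection in input space:

\[W\,(I - \alpha\, x x^\top)\,u \;=\; W u \;-\; \alpha\,(x^\top u)\,W x.\]

For \(\|x\| = 1\), the factor \(I - \alpha\, x x^\top\) scales the component of any input along \(x\) by \(1 - \alpha\) and leaves directions orthogonal to \(x\) unchanged; \(\alpha = 1\) gives the exact orthogonal projector onto the complement of \(x\).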

Figure 2. Outputs \(W u\) (solid) vs \(W(I - \alpha\, x x^\top)u\) (dashed) for unit directions \(u\). The largest change is along \(x\); the thick ray is \(W x\).

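Figure 3. Update magnitude \(\|\alpha\, W x x^\top u\|\) vs the angle \(\theta\) between \(u\) and \(x\). Maximal when \(u \parallel x\) (\(\theta = 0\)), minimal (zero) when \(u \perp x\) (\(\theta = 90^\circ\)).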
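A small numerical check of the geometry behind Figures 2 and 3, assuming a unit-norm input \(x\), a hypothetical random 2×2 weight, and \(\alpha = 0.5\). The output change \(Wu - W(I - \alpha x x^\top)u\) equals \(\alpha (x^\top u)\, W x\), so its magnitude follows \(\alpha\,|\cos\theta|\,\|Wx\|\).

```python
import numpy as np

rng = np.random.default_rng(1)
W = rng.normal(size=(2, 2))                 # hypothetical 2x2 weight
x = np.array([1.0, 0.0])                    # unit-norm current input direction
alpha = 0.5
P = np.eye(2) - alpha * np.outer(x, x)      # right-side factor I - alpha x x^T

for deg in (0, 30, 60, 90):
    theta = np.deg2rad(deg)
    u = np.array([np.cos(theta), np.sin(theta)])   # unit probe at angle theta from x
    change = W @ u - W @ (P @ u)                    # equals alpha * (x @ u) * (W @ x)
    predicted = alpha * abs(np.cos(theta)) * np.linalg.norm(W @ x)
    print(f"{deg:>2} deg  |change|={np.linalg.norm(change):.4f}  predicted={predicted:.4f}")
```

Every change vector is parallel to \(Wx\); only its length varies with the angle.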

Figure 4. Batched projection \(I - \alpha G\): the unit circle maps to an ellipse. Labels show shrink factors \(1 - \alpha\lambda_i\) along the Gram eigenvectors.
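The batched case can be sketched the same way, assuming a batch-averaged Gram matrix \(G = X^\top X / B\) built from a hypothetical anisotropic 2-D batch. Because \(I - \alpha G\) shares eigenvectors with \(G\), each eigenvector \(v_i\) is scaled by exactly \(1 - \alpha\lambda_i\), which is why the unit circle becomes an ellipse aligned with those eigenvectors.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(256, 2)) @ np.diag([2.0, 0.5])  # hypothetical anisotropic batch
G = X.T @ X / X.shape[0]                   # batch-averaged Gram matrix (assumed normalization)
alpha = 0.1
P = np.eye(2) - alpha * G                  # batched right-side factor

lam, V = np.linalg.eigh(G)                 # eigenvalues (ascending) and eigenvectors (columns)
shrink = 1 - alpha * lam                   # predicted shrink factor per eigenvector
for i in range(2):
    print(f"lambda={lam[i]:.3f}  shrink={shrink[i]:.3f}  |P v|={np.linalg.norm(P @ V[:, i]):.3f}")
```

High-variance input directions (large \(\lambda_i\)) are shrunk the most, while rarely seen directions are left almost untouched.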

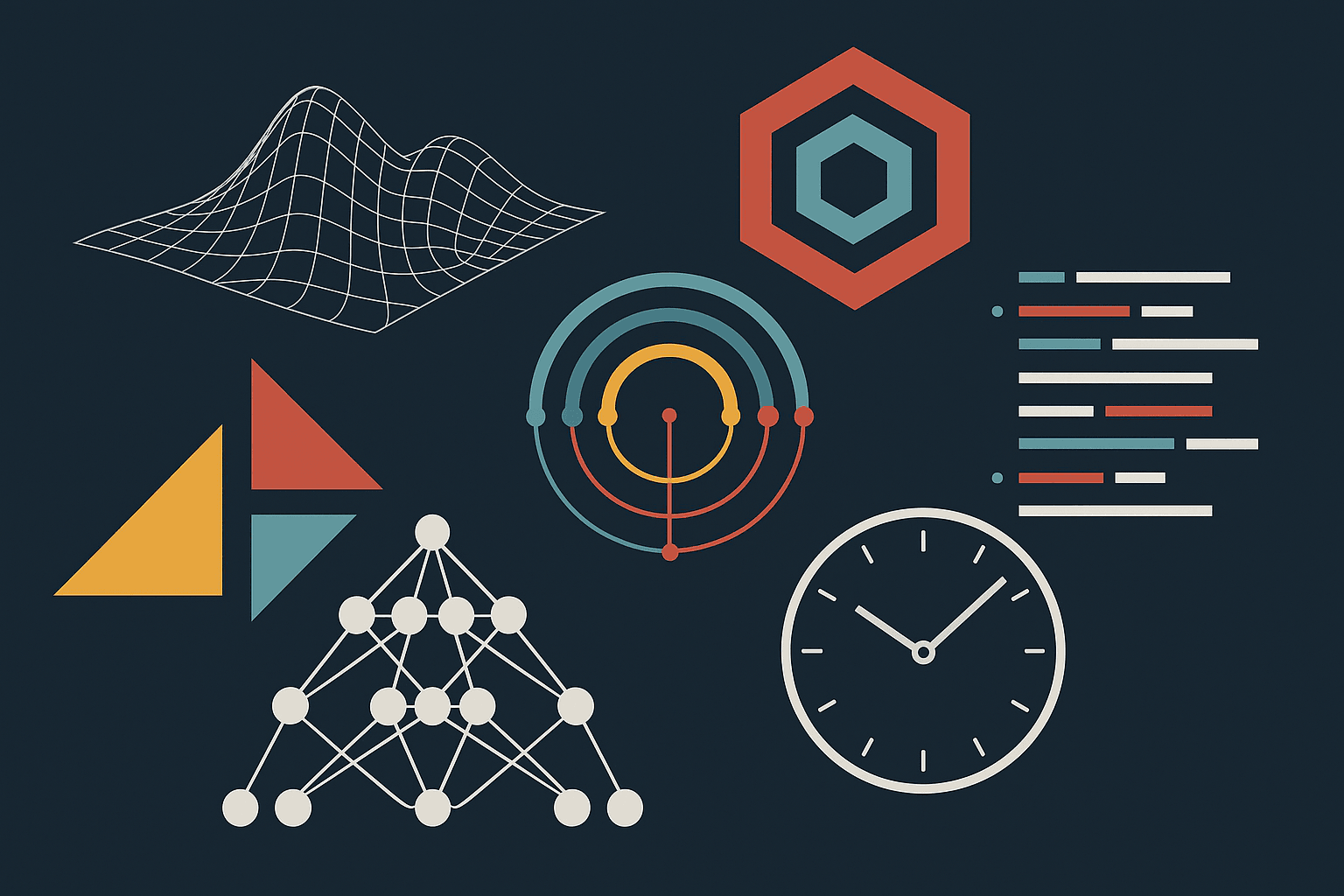

4. Multi-Clock Training Sequence

The figure below illustrates the interaction between model blocks, accumulators, and optimizers during a training step. Each block has its own clock; at each global step, we accumulate gradients, then apply updates only for blocks whose period divides the step counter.

Figure 6. Multi‑clock training sequence showing gradient accumulation, period checking, and update scheduling. At global step 128 (t = 127 with a zero-based counter), all three blocks update because their periods (1, 16, 128) all divide 128.
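Whether a block is due depends on whether the loop counter starts at 0 or 1. A small sketch using the periods (1, 16, 128) from Figure 6; the block names and the `due_blocks` helper are hypothetical.

```python
def due_blocks(step, periods, zero_based=False):
    """Return the blocks whose period divides the global step count.
    With a zero-based loop counter t, the k-th step is t = k - 1, so test t + 1."""
    count = step + 1 if zero_based else step
    return [name for name, period in periods.items() if count % period == 0]

periods = {"fast": 1, "mid": 16, "slow": 128}     # hypothetical names, periods from Figure 6
print(due_blocks(128, periods))                   # one-based counter
print(due_blocks(127, periods, zero_based=True))  # the same step with a zero-based counter
```

Mixing the two conventions shifts every slow update by one step, so it is worth fixing one convention in the training loop.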

5. Implementation (runnable code)

This section summarizes the accompanying modules; each listing points to a complete, ready‑to‑run file with detailed comments.

Listing 1 — Minimal reference (DeepL2GD + CMS + toy continual learning)

See `nested_learning_minimal.py` in the accompanying files for the fully commented version. The code demonstrates:

- NLLinear: Linear layers with cached inputs for Gram-matrix computation

- DeepL2GD: Applies the input-space projection before the base optimizer update

- CMSSequential: Multi-timescale learner with per-block periods

- NLTrainer: Trains on sequential tasks and measures retention/plasticity

Key experiment: train on Task A, then Task B. Report accuracy on both tasks to measure how well the multi-clock system balances new learning with memory of past tasks.
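The accompanying files are not reproduced here, but the NLLinear/DeepL2GD pairing can be sketched in NumPy, assuming the layer caches its last input batch, the Gram matrix is batch-averaged, and the base optimizer is plain SGD. `NLLinearSketch` and `deep_l2gd_step` are hypothetical stand-ins, not the author's classes.

```python
import numpy as np

class NLLinearSketch:
    """Hypothetical stand-in for an NLLinear-style layer: y = x W^T, caching the
    last input batch so a DeepL2GD-style step can form the Gram matrix."""

    def __init__(self, d_in, d_out, rng):
        self.W = rng.normal(scale=d_in ** -0.5, size=(d_out, d_in))
        self.x_cache = None

    def forward(self, x):                         # x: (B, d_in)
        self.x_cache = x
        return x @ self.W.T

    def deep_l2gd_step(self, grad_y, lr, alpha):
        """Project with I - alpha G, then take a plain SGD step on the mean gradient."""
        x = self.x_cache
        G = x.T @ x / x.shape[0]                  # (d_in, d_in) batch-averaged Gram
        grad_W = grad_y.T @ x / x.shape[0]        # mean of u_i x_i^T over the batch
        self.W = self.W @ (np.eye(G.shape[0]) - alpha * G) - lr * grad_W

def half_mse(layer, X, Y):
    return 0.5 * np.mean(np.sum((layer.forward(X) - Y) ** 2, axis=1))

rng = np.random.default_rng(0)
layer = NLLinearSketch(4, 3, rng)
X, Y = rng.normal(size=(32, 4)), rng.normal(size=(32, 3))
loss_before = half_mse(layer, X, Y)
for _ in range(200):
    pred = layer.forward(X)
    layer.deep_l2gd_step(grad_y=pred - Y, lr=0.1, alpha=0.01)  # per-sample surprise pred - Y
loss_after = half_mse(layer, X, Y)
print(f"loss {loss_before:.3f} -> {loss_after:.3f}")
```

For the Task A / Task B experiment, the same step would run on each task in turn, with accuracy on both tasks recorded after each phase.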

Listing 2 — EMA‑smoothed deep optimizer

See `deep_l2gd_ema.py` for the fully commented version. **Key difference from DeepL2GD**:

- Maintains an exponential moving average of Gram matrices

- Updates: \(\bar{G} \leftarrow \beta\,\bar{G} + (1 - \beta)\,G\), where \(G\) is the current batch Gram matrix and \(\beta \in [0, 1)\) is the decay

- Smooths out noise from small or biased minibatches

- Useful for noisy continual learning scenarios where gradient directions fluctuate
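A minimal EMA tracker, assuming batch-averaged Gram matrices and a zero-initialized average. The Adam-style bias correction \(\bar{G} / (1 - \beta^n)\) is our own addition to compensate for the zero start; `EMAGram` is a hypothetical name, not the listing's class.

```python
import numpy as np

class EMAGram:
    """Hypothetical EMA tracker: G_bar <- beta * G_bar + (1 - beta) * G_batch."""

    def __init__(self, d, beta=0.9):
        self.beta = beta
        self.G_bar = np.zeros((d, d))
        self.n = 0

    def update(self, x):                          # x: (B, d) minibatch of inputs
        G_batch = x.T @ x / x.shape[0]
        self.G_bar = self.beta * self.G_bar + (1 - self.beta) * G_batch
        self.n += 1
        return self.G_bar / (1 - self.beta ** self.n)   # bias-corrected estimate (assumption)

rng = np.random.default_rng(0)
true_G = np.diag([4.0, 1.0, 0.25])
tracker = EMAGram(3, beta=0.95)
for _ in range(200):
    xb = rng.normal(size=(8, 3)) * np.sqrt(np.diag(true_G))   # small, noisy batch
    est = tracker.update(xb)
G_last = xb.T @ xb / xb.shape[0]
print("EMA error:", np.linalg.norm(est - true_G), " last-batch error:", np.linalg.norm(G_last - true_G))
```

The smoothed estimate can then replace the per-batch \(G\) in the projection \(I - \alpha G\).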

Listing 3 — Convolutional variant (channel‑covariance approximation)

See `nl_conv.py` for the fully commented version. Applies Nested Learning to Conv2d:

- Channel-level covariance instead of the full spatial Gram matrix (for efficiency)

- Input reshape: `(N, C_in, H, W) → (N·H·W, C_in)` to compute channel statistics

- Weight averaging across kernel spatial dimensions reduces dimensionality

- Scaling preserves average kernel magnitude during projection
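The channel-covariance idea can be sketched as follows, assuming every spatial position counts as one sample. Note the swap: instead of the listing's spatial weight averaging and rescaling, this sketch applies \(W \leftarrow W(I - \alpha G_c)\) along the input-channel axis identically at every kernel tap. Both function names are hypothetical.

```python
import numpy as np

def channel_gram(x):
    """x: (N, C_in, H, W) activations -> (C_in, C_in) channel covariance,
    treating every spatial position as one sample."""
    n, c, h, w = x.shape
    flat = x.transpose(0, 2, 3, 1).reshape(n * h * w, c)   # (N*H*W, C_in)
    return flat.T @ flat / flat.shape[0]

def conv_channel_projection(weight, G_c, alpha):
    """Right-multiply each (C_out, C_in) slice of a (C_out, C_in, kH, kW) kernel
    by I - alpha * G_c, i.e. project along the input-channel axis at every tap."""
    P = np.eye(G_c.shape[0]) - alpha * G_c
    return np.einsum("oikl,ij->ojkl", weight, P)

rng = np.random.default_rng(0)
acts = rng.normal(size=(8, 16, 10, 10))     # (N, C_in, H, W) activations
G_c = channel_gram(acts)                    # 16x16, vs 144x144 for unfolded 3x3 patches
kernel = rng.normal(size=(32, 16, 3, 3))    # (C_out, C_in, kH, kW)
new_kernel = conv_channel_projection(kernel, G_c, alpha=0.05)
print(G_c.shape, new_kernel.shape)
```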

Listing 4 — Tiny Transformer wired with clocks

See `nl_transformer_tiny.py` for the fully commented version. Demonstrates multi-clock Transformer:

- MHA: Q/K/V and O projections with NLLinear input caching

- Block: Attention + FFN with residuals

- wire_clocks(): Assigns C=1 (fast) to the Q/K/V projections, C=8 to the O projection, C=64 (slow) to the FFN

- step_with_clocks(): Applies updates based on period divisibility

- make_synth_bigrams(): Two bigram regimes for continual learning; trains on Task A, then transitions to Task B under a distribution shift
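One hypothetical way to express the clock wiring is a list of name-pattern rules mapped to periods (1 for Q/K/V, 8 for O, 64 for FFN). The parameter names and `wire_clocks_sketch` are assumptions for illustration, not the demo's actual identifiers.

```python
import re

# Hypothetical parameter names for one Transformer block.
PARAMS = ["attn.q_proj.weight", "attn.k_proj.weight", "attn.v_proj.weight",
          "attn.o_proj.weight", "ffn.fc1.weight", "ffn.fc2.weight"]

CLOCK_RULES = [(r"attn\.[qkv]_proj", 1),   # fast: Q/K/V projections
               (r"attn\.o_proj", 8),        # medium: output projection
               (r"ffn\.", 64)]              # slow: feed-forward network

def wire_clocks_sketch(names, rules=CLOCK_RULES, default=1):
    """Map each parameter name to the period of the first rule that matches it."""
    return {n: next((C for pat, C in rules if re.search(pat, n)), default) for n in names}

clocks = wire_clocks_sketch(PARAMS)
# Number of updates each parameter receives over 128 one-based steps.
updates = {n: sum(1 for t in range(1, 129) if t % C == 0) for n, C in clocks.items()}
print(updates)
```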

Figure 5. Multi‑clock schedule: Q/K/V update every step (C=1), output projection slower (C=8), FFN slowest (C=64).

6. Practical recipes

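- Clock assignment: fast for sequence‑local paths (Q/K/V, head), slower for knowledge‑heavy paths (O, FFN). Geometric periods, where each level's period is a fixed multiple of the previous one (e.g., 1, 8, 64 as in the Transformer demo), are a robust default.

- Deep‑optimizer strength: start with a small \(\alpha\). Larger values risk underfitting dominant directions, since the projection shrinks each Gram eigendirection by \(1 - \alpha\lambda_i\) and the largest eigenvalues shrink fastest.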

- EMA vs per‑batch: use EMA when minibatches are noisy or small; per‑batch when you want rapid adaptation.
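Two of these recipes can be encoded as small helpers. `geometric_periods` builds \(C_\ell = r^\ell\); `max_safe_alpha` is our own heuristic bound (not a value from the source) that keeps every shrink factor \(1 - \alpha\lambda_i\) non-negative, so no input direction has its sign flipped. Both names are hypothetical.

```python
import numpy as np

def geometric_periods(n_levels, ratio=8):
    """Periods C_l = ratio**l for levels l = 0..n_levels-1 (fastest first)."""
    return [ratio ** level for level in range(n_levels)]

def max_safe_alpha(G):
    """Largest alpha keeping every shrink factor 1 - alpha * lambda_i >= 0
    (G symmetric positive semidefinite)."""
    return 1.0 / np.linalg.eigvalsh(G).max()

print(geometric_periods(3))                   # periods used in the Transformer demo
print(max_safe_alpha(np.diag([4.0, 1.0])))
```

In practice one would start well below this bound, since values near it nearly erase the dominant input direction.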

7. Reproducibility

- Minimal run: `python nested_learning_minimal.py` (reports Task‑A/B accuracies before/after sequential training).

- Transformer demo: `python nl_transformer_tiny.py` (synthetic bigram regimes to simulate distribution shift).

- Conv projection: import `NLConv2d` and call `conv_channel_projection_step` at due steps.

Note: The source code for this blog is available at: NestedLearning

8. References

- Google Research Blog — “Introducing Nested Learning: A new ML paradigm for continual learning” (Nov 7, 2025).

- NeurIPS 2025 — “Nested Learning: The Illusion of Deep Learning Architectures”, Behrouz, Razaviyayn, Zhong, Mirrokni.

- Titans — “Titans: Learning to Memorize at Test Time”, Behrouz, Zhong, Mirrokni.

About Pronam Chatterjee

A visionary with 25 years of technical leadership under his belt, Pronam isn’t just ahead of the curve; he’s redefining it. His expertise extends beyond the technical, making him a sought-after speaker and published thought leader.

Whether strategizing the next technology and data innovation or his next chess move, Pronam thrives on pushing boundaries. He is a father of two loving daughters and a Golden Retriever.

With a blend of brilliance, vision, and genuine connection, Pronam is more than a leader; he’s an architect of the future, building something extraordinary.
